Thanks to opsgility (original post).

For the new AzureRM (Resource Manager) model, please check the official guide: Get started with Azure PowerShell cmdlets.

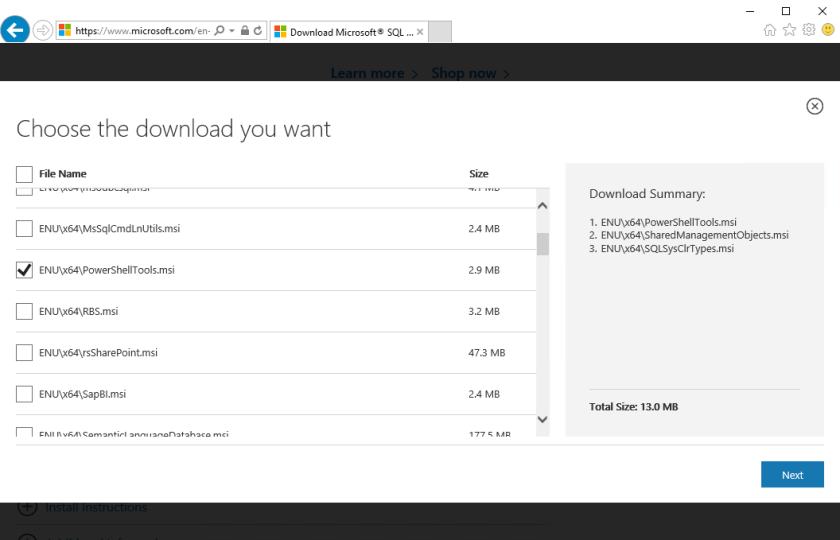

Download the Azure PowerShell Cmdlets

The Windows installer can also be found at Microsoft Azure Downloads.

Configure your Azure Subscription with the Azure PowerShell Cmdlets.

The simplest way to access your Azure subscription from PowerShell is to use the Add-AzureAccount cmdlet.

Add-AzureAccount

After you execute the cmdlet, a dialog prompts you to log in with your Microsoft or organizational account. After you log in, you will have access for 12 hours before you have to log in again.

Enumerating and selecting a subscription

You can use the PowerShell cmdlets to enumerate and view your current subscription settings.

Here are some of the more handy ones to know about:

# Enumerates all configured subscriptions on your local machine.

Get-AzureSubscription

# Returns details only on the specified subscription

Get-AzureSubscription -SubscriptionName "mysubscription"

# Select the subscription to use

Select-AzureSubscription -SubscriptionName "mysubscription"

# Sets the mysub subscription to be the default if one is not selected.

Set-AzureSubscription -DefaultSubscription "mysub"
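To see at a glance which subscription is the default and which is currently selected, the output of Get-AzureSubscription can be piped into a table. This is a small sketch, not part of the original post. The property names (SubscriptionName, SubscriptionId, IsDefault, IsCurrent) are assumed from the classic Azure module's output and may differ between module versions.

```powershell
# List every configured subscription with its default/current flags.
# Property names are assumptions based on the classic Azure module output.
Get-AzureSubscription |
    Select-Object SubscriptionName, SubscriptionId, IsDefault, IsCurrent |
    Format-Table -AutoSize
```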

Configuring storage with the CurrentStorageAccount parameter

When using the Azure cmdlets with Virtual Machines (IaaS) or Cloud Services (PaaS), you need to specify the CurrentStorageAccount for your subscription. This is the storage account used for creating VHDs or uploading .cspkg files. For virtual machines, this storage account has to be in the same data center where you plan to create the virtual machines.

Set-AzureSubscription -SubscriptionName "mysub" -CurrentStorageAccount "mystorageaccount"

To check whether you already have a storage account, or to create a new one from PowerShell:

# Discover whether you have a storage account already

Get-AzureStorageAccount

# Creates a new storage account in the West Europe data center

New-AzureStorageAccount -StorageAccountName "mystorageaccountname" -Location "West Europe"

Which of course raises the question: how do I know which data centers are available?

The following cmdlet will give you that information:

Get-AzureLocation
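The storage steps above can be combined into one short script. The following is only a sketch under stated assumptions: the subscription name, storage account name, and location are hypothetical placeholders, and the StorageAccountName and Name properties are assumed from the classic Azure module's output. Depending on the module version, the -CurrentStorageAccount parameter may be spelled -CurrentStorageAccountName.

```powershell
# Hypothetical names for illustration only; replace with your own values.
$subscriptionName = "mysub"
$storageName      = "mystorageaccountname"
$location         = "West Europe"

# Make sure the requested location is one the subscription can actually use.
$validLocations = Get-AzureLocation | Select-Object -ExpandProperty Name
if ($validLocations -notcontains $location) {
    throw "Location '$location' is not available for this subscription."
}

# Look for an existing storage account with this name in the current subscription.
$account = Get-AzureStorageAccount |
    Where-Object { $_.StorageAccountName -eq $storageName }

if (-not $account) {
    # Not found: create it in the chosen location.
    New-AzureStorageAccount -StorageAccountName $storageName -Location $location
}

# Use this account by default for VHDs and .cspkg uploads.
Set-AzureSubscription -SubscriptionName $subscriptionName -CurrentStorageAccount $storageName
```

Storage account names are unique across Azure, so the creation step can still fail if someone else already holds the name.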

This is everything you need to configure the Azure PowerShell cmdlets for your subscription!